About the Customer

The Defense Logistics Agency (DLA), a vital component of the U.S. Department of Defense (DoD), plays a critical role in supplying the nation’s military forces and supporting their operations. DLA ensures the availability of necessary resources and materials across a vast range of logistical needs. DLA is investing in Artificial Intelligence and Machine Learning (AI/ML) for a variety of use cases. DLA’s Procurement Integrated Enterprise Environment (PIEE) is the primary system for DoD procure-to-pay activities.

Customer Challenge

PIEE program leadership wanted to use AI and machine learning to improve decision-making, increase efficiency, and raise overall performance. PIEE required an AI/ML solution tailored to its unique data and built to operate within its air-gapped, IL5-compliant AWS cloud enclave.

Credence Solution

Credence created an agentic chatbot named Albert, an AI-driven platform designed to make knowledge more accessible to PIEE team members and end users. The solution holds an Authority to Operate (ATO) and runs in the Credence-managed AWS IL5 enclave. It uses machine learning algorithms to analyze large volumes of data and provide actionable insights and predictive analytics. Because it integrates with existing systems, PIEE Albert can process and interpret data from multiple sources, offering a comprehensive view of organizational performance and potential areas for improvement. The platform’s user-friendly interface lets even non-technical users access and understand the generated insights, making it a valuable tool for executives and managers alike.

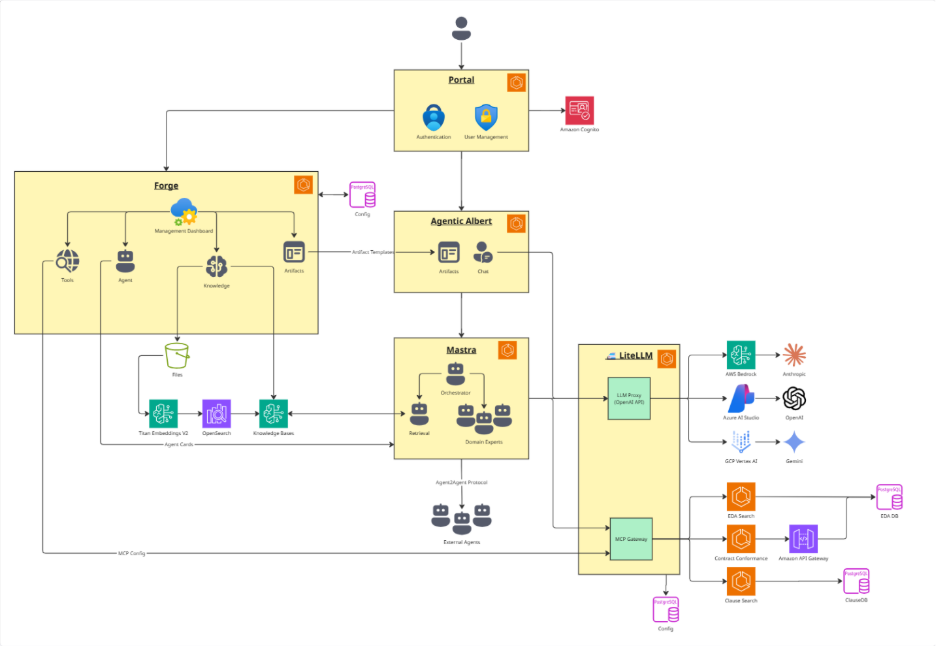

As illustrated in the diagram above, the PIEE Albert architecture integrates user management, agent orchestration, knowledge management, and large language model (LLM) services. The system is composed of several interconnected modules: Portal, Forge, Agentic Albert, Mastra, and LiteLLM. Each module has a specific role in the overall workflow and communicates with the others through defined interfaces. All components are containerized for deployment to Amazon Elastic Container Service (Amazon ECS), with images stored and scanned in Amazon Elastic Container Registry (Amazon ECR).

At the top of the diagram is the “Portal” module, which handles authentication and user management. It connects to Amazon Cognito for secure access control. Users interact with this portal to gain entry into the system.

The “Forge” module serves as a central hub for managing agents, tools, knowledge bases, and artifacts. It features a Management Dashboard that allows administrators to oversee these components. Files are ingested into a Knowledge Base within Forge for use by agents or other modules.

“Agentic Albert” acts as an intermediary between Forge and Mastra, handling artifact templates and chat functionality. The “Mastra” module orchestrates agent workflows by coordinating retrieval tasks and domain-specific agents, both internal and external, through the Agent2Agent (A2A) protocol. Forge provides a low-code interface for modifying internal agent programming via Agent Cards. The agentic workflow culminates in presenting information to the user as a set of bespoke visual artifacts, which are also curated through the Forge interface.
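An Agent Card is the metadata document an agent publishes so that an orchestrator such as Mastra can discover it and decide when to call it. The following is a minimal Python sketch of that idea, assuming field names modeled on the general shape of the public A2A Agent Card (name, description, url, version, capabilities, skills, input and output modes). The agent name, URL, and skill are hypothetical, and nothing here is taken from the actual Forge or Albert implementation.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentSkill:
    # One capability the agent advertises to other agents.
    id: str
    name: str
    description: str
    tags: list[str] = field(default_factory=list)


@dataclass
class AgentCard:
    # Metadata an orchestrator can read to discover and route work to an agent.
    # Field names mirror the JSON keys of an A2A-style Agent Card (assumption),
    # which is why some of them are camelCase.
    name: str
    description: str
    url: str
    version: str
    skills: list[AgentSkill]
    capabilities: dict = field(default_factory=lambda: {"streaming": False})
    defaultInputModes: list[str] = field(default_factory=lambda: ["text/plain"])
    defaultOutputModes: list[str] = field(default_factory=lambda: ["text/plain"])

    def to_json(self) -> str:
        # asdict() recursively converts nested dataclasses (the skills) to dicts.
        return json.dumps(asdict(self), indent=2)


# Hypothetical internal agent; the name, URL, and skill are illustrative only.
card = AgentCard(
    name="contract-lookup-agent",
    description="Answers questions about procurement contract records.",
    url="https://agents.example.internal/contract-lookup",
    version="0.1.0",
    skills=[
        AgentSkill(
            id="contract-status",
            name="Contract status lookup",
            description="Summarizes the status of a contract by number.",
            tags=["procurement", "contracts"],
        )
    ],
)

print(card.to_json())
```

Keeping the card as plain data is what makes a low-code editor practical: changing an agent's advertised skills means editing fields, not orchestration code.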

On the right side of the diagram is “LiteLLM,” which provides LLM proxy capabilities for supported APIs via an MCP Gateway interface. LiteLLM connects to external LLM providers such as Anthropic Claude, OpenAI models, Azure OpenAI Service, and Google Gemini models via the Vertex AI API gateway. It also supports embedding search through Amazon Bedrock and Amazon OpenSearch Service, with Amazon Aurora PostgreSQL databases used for context retrieval and confidence scoring.
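In production, Bedrock embeddings together with OpenSearch or Aurora PostgreSQL perform vector search at scale. The plain-Python sketch below only illustrates the underlying idea: rank stored text chunks by cosine similarity to a query embedding and use a minimum score as a simple confidence cutoff. The function names, the `k` and `min_score` values, and the toy three-dimensional vectors are hypothetical; the document does not specify how Albert computes its confidence scores.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Cosine of the angle between two vectors: 1.0 means same direction.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, chunks, k=2, min_score=0.5):
    """Return up to k (score, text) pairs at or above min_score, best first.

    chunks is a list of (text, embedding) pairs. min_score is a simple
    confidence cutoff: weak matches are dropped rather than passed to the LLM.
    """
    scored = [(cosine_similarity(query_vec, emb), text) for text, emb in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(score, text) for score, text in scored[:k] if score >= min_score]


# Toy 3-dimensional "embeddings"; real embedding models produce far larger vectors.
chunks = [
    ("How to register for a PIEE account", [0.9, 0.1, 0.0]),
    ("Invoice submission steps", [0.1, 0.9, 0.1]),
    ("Cafeteria hours", [0.0, 0.1, 0.9]),
]
query = [0.8, 0.2, 0.0]

for score, text in retrieve(query, chunks):
    print(f"{score:.2f}  {text}")
```

With these toy vectors, the second-best chunk scores well below the cutoff, so only the account-registration chunk is returned. A threshold like this lets a chatbot say it lacks relevant context instead of answering from a weak match.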

Overall, this architecture shows how the components work together to deliver user authentication and management, agent creation and orchestration, knowledge ingestion and retrieval, artifact generation, chat interactions, and integration with advanced language models, all within a modular enterprise environment suited to scalable AI applications.

Results and Benefits

One of Albert’s key features is its ability to learn and adapt over time. As it processes more data, the platform refines its algorithms, improving the accuracy and relevance of its predictions. This adaptive capability keeps the insights current and reflective of the latest trends and patterns within the organization. Albert also includes robust data governance and security measures that protect sensitive information and help the platform comply with relevant regulations and standards.

The implementation of Albert for PIEE led to significant improvements in efficiency and effectiveness. By automating routine data analysis tasks, the platform frees team members and end users to focus on more strategic activities. Its predictive analytics capabilities are also expected to help PIEE anticipate and mitigate potential risks, leading to better-informed decisions and stronger overall performance. Because the solution is scalable, it can grow with the organization and continue to provide support as the program evolves and expands.

In summary, the Albert chatbot is a powerful tool for organizations looking to use AI and machine learning to improve decision-making and overall performance. Its advanced analytics capabilities, user-friendly interface, and adaptive learning features make it a valuable asset for any organization seeking to stay ahead in a data-driven world.

About Credence

Credence, an AWS Premier Tier Partner, holds multiple AWS Competencies and Service Delivery designations, including the Migration and Modernization Consulting Competency, the Government Consulting Competency, and the GenAI Competency (pending approval), and participates in the AWS Managed Service Provider (MSP) Program, demonstrating its expertise in these areas. As a leading cloud MSP at DLA and across the DoD, Credence has the capabilities and experience to manage the full cloud lifecycle, from initial migration to modernization. Building on this cloud foundation, Credence uses cutting-edge technologies to deliver AI/ML solutions that drive automation and predictive capabilities. Credence’s holistic approach – spanning data engineering, AI, platform engineering, cybersecurity, and operations – establishes it as a trusted partner in guiding organizations toward innovation and success in the digital era.