Pravin Muthu | 30 May 2025

Generative AI (GenAI) interfaces are rapidly becoming standard tools for accessing and interacting with information across organizations. However, to ensure responsible deployment, a robust governance framework is essential. This governance must extend beyond the AI models themselves to encompass the underlying datasets that enrich them.

This is particularly critical for organizations like the Defense Logistics Agency (DLA) with its DAAS platform, a key DoD data broker. DLA’s DAAS faces the challenge of serving a multitude of user groups – from individuals seeking answers to general FAQs and external customers requiring specific data, to internal developers building new applications across diverse contexts and use cases. Therefore, a comprehensive framework is needed to deliver these AI-powered capabilities while maintaining secure access through a consistent and trusted interface for all users.

Robust Governance: The Backbone of Trustworthy GenAI

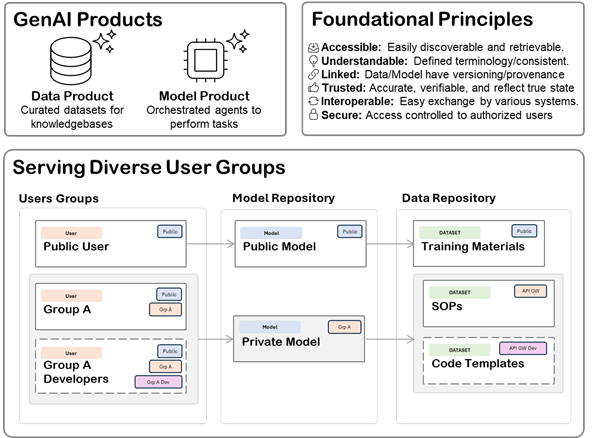

At the core of any GenAI system are Data Products and Model Products, which function as interoperable endpoints allowing for governed connectivity between various components. Data Products are the curated datasets—used for training, fine-tuning, or providing knowledge via Retrieval Augmented Generation (RAG)—that significantly shape a model’s behavior, capabilities, and potential biases. Model Products are the GenAI models themselves, engineered for tasks like question answering or text generation. To facilitate this interoperability and governance, comprehensive documentation is critical: Data Cards detail a dataset’s origins, composition, and intended uses, while Model Cards provide specifics on a model’s architecture, training data, performance, and ethical considerations. This structured documentation forms the basis for these components to connect and operate within a governed framework.
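To make the card concept concrete, here is a minimal sketch of how Data Cards and Model Cards might be represented so that the link between them can be checked automatically. The class names, field names, and the `name@version` reference format are illustrative assumptions, not a schema taken from DAAS or UDRA.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataCard:
    """Hypothetical Data Card: origins, composition, intended uses."""
    name: str
    version: str
    origin: str
    composition: str
    intended_uses: tuple[str, ...]


@dataclass(frozen=True)
class ModelCard:
    """Hypothetical Model Card: architecture, training data, performance, ethics."""
    name: str
    version: str
    architecture: str
    # Assumed convention: each entry is a "name@version" reference to a Data Card.
    training_data: tuple[str, ...]
    performance: dict[str, float] = field(default_factory=dict)
    ethical_considerations: str = ""


def card_ref(card: DataCard) -> str:
    """Build the reference string a Model Card would use for this Data Card."""
    return f"{card.name}@{card.version}"


def unresolved_data_refs(model_card: ModelCard, data_cards: list[DataCard]) -> list[str]:
    """Return training-data references that match no known Data Card."""
    known = {card_ref(card) for card in data_cards}
    return [ref for ref in model_card.training_data if ref not in known]
```

A governance check could call `unresolved_data_refs` before a Model Card is accepted, so a model never cites a dataset that lacks documentation.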

Effective lifecycle management is key for these products, and the handling of Model Card versions illustrates it. Robust versioning keeps a single, authoritative, immutable card for each artifact version, often created automatically and accompanied by descriptive change notes. Audit trails record every activity, including creations, views, edits, approvals, and state changes, which provides accountability and a permanent, traceable history of the artifact's development. This careful management of versions and changes underpins the governance of model products.
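The versioning and audit-trail behavior described above can be sketched as a small in-memory registry. This is an illustration under stated assumptions: `CardRegistry`, `AuditEvent`, and the action names are hypothetical, and a real system would persist both cards and events in durable, tamper-evident storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from types import MappingProxyType


@dataclass(frozen=True)
class AuditEvent:
    """One immutable entry in the audit trail (hypothetical shape)."""
    timestamp: str
    actor: str
    action: str
    artifact: str
    version: str


class CardRegistry:
    """Keeps exactly one read-only card per (artifact, version) and logs every access."""

    def __init__(self) -> None:
        self._cards = {}   # (artifact, version) -> read-only card content
        self._audit = []   # append-only list of AuditEvent

    def _log(self, actor: str, action: str, artifact: str, version: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self._audit.append(AuditEvent(stamp, actor, action, artifact, version))

    def publish(self, actor, artifact, version, content, change_note):
        """Store a new card version; an existing version can never be overwritten."""
        key = (artifact, version)
        if key in self._cards:
            raise ValueError(f"{artifact} {version} already has an authoritative card")
        # MappingProxyType gives callers a read-only view of the stored content.
        self._cards[key] = MappingProxyType({**content, "change_note": change_note})
        self._log(actor, "create", artifact, version)

    def view(self, actor, artifact, version):
        """Return the card and record that it was viewed."""
        card = self._cards[(artifact, version)]
        self._log(actor, "view", artifact, version)
        return card

    def audit_trail(self):
        """Return the history as a tuple so callers cannot append to or reorder it."""
        return tuple(self._audit)
```

Changing a card in this design means publishing a new version with its own change note, which keeps earlier versions intact for later review.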

Our approach is guided by the Army's Unified Data Reference Architecture (UDRA). Under UDRA, data products, and by extension model products, follow specific standards. Applied to documentation, these principles ensure that data and model cards are discoverable, clear, connected, accurate, and protected. Computational governance strengthens this by using automated methods to enforce policies, ensuring documentation is complete, consistently formatted, and validated based on risk. Defining Policy-as-Code (PaC) additionally allows card content to be validated automatically against organizational or regulatory standards, such as the NIST AI Risk Management Framework (AI RMF). This embeds governance directly into workflows and supports compliance, transparency, trust, and security proactively rather than after the fact.
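As an illustration of Policy-as-Code with risk-based validation, the sketch below expresses a policy as plain data and checks a card against it. The risk tiers, required fields, and version rule are hypothetical examples chosen for this sketch; they are not drawn from UDRA or the NIST AI RMF.

```python
import re

# Hypothetical policy: higher-risk artifacts must document more fields.
REQUIRED_FIELDS_BY_RISK = {
    "low": ("name", "version", "intended_use"),
    "high": (
        "name",
        "version",
        "intended_use",
        "training_data",
        "evaluation",
        "ethical_considerations",
    ),
}

# Assumed formatting rule: versions look like MAJOR.MINOR.PATCH.
VERSION_PATTERN = re.compile(r"\d+\.\d+\.\d+")


def validate_card(card: dict, risk_tier: str) -> list[str]:
    """Return a list of policy violations; an empty list means the card passes."""
    violations = []
    for field_name in REQUIRED_FIELDS_BY_RISK[risk_tier]:
        # Empty strings and empty lists count as missing.
        if not card.get(field_name):
            violations.append(f"missing field: {field_name}")
    version = card.get("version")
    if version and not VERSION_PATTERN.fullmatch(str(version)):
        violations.append("version must look like MAJOR.MINOR.PATCH")
    return violations
```

Because the policy lives in data rather than in reviewers' heads, the same check can run in a CI pipeline or an approval workflow and block a card that falls short for its risk tier.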

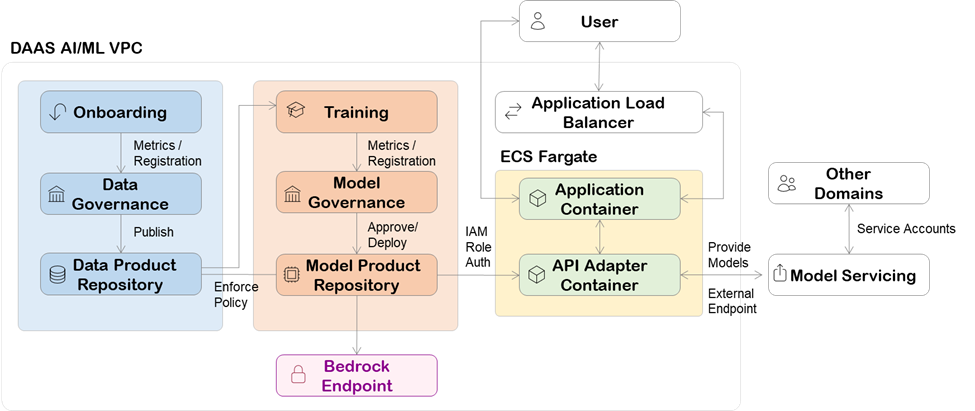

Architecting for GenAI: From Data Ingestion to Model Serving

Our GenAI architecture prioritizes strong Model and Data Repositories. The lifecycle begins with data product generation: data is collected, prepared, documented with a comprehensive Data Card, and then versioned and stored. An initial data management and governance process organizes the curated data products and makes them accessible in a central data repository. These data products are then used during model development to create new models or fine-tune existing ones. Once developed, model products and their detailed Model Cards go through a rigorous governance procedure for approval. Approved model products are deployed into a dedicated model repository, which also serves as the point from which operational policies are enforced.
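One way to picture the approval gate in this lifecycle is as a small state machine in which deployment is reachable only through approval. The state names and allowed transitions below are assumptions made for illustration, not the actual workflow.

```python
from enum import Enum


class State(Enum):
    """Hypothetical lifecycle states for a model product."""
    DRAFT = "draft"
    IN_REVIEW = "in_review"
    APPROVED = "approved"
    DEPLOYED = "deployed"


# Assumed transitions: review can approve or send the product back to draft.
ALLOWED_TRANSITIONS = {
    State.DRAFT: {State.IN_REVIEW},
    State.IN_REVIEW: {State.APPROVED, State.DRAFT},
    State.APPROVED: {State.DEPLOYED},
    State.DEPLOYED: set(),
}


def advance(current: State, target: State) -> State:
    """Move to the target state, or raise if governance does not allow the step."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

With this shape, a call such as `advance(State.DRAFT, State.DEPLOYED)` raises an error, so a model cannot reach the model repository without passing review.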

The framework uses an API-centric approach to host and serve models. User interactions pass through an Application Load Balancer to containerized applications running on Amazon ECS with AWS Fargate. Central to this is the API Adapter Container, a key backend component. This API layer handles business logic, orchestrates calls to GenAI models (internal custom models or foundation models accessed through Amazon Bedrock), and manages interactions with the Model and Data Cards. It provides a secure, governed entry point and a generalized, consistent interface that hides differences between model providers and routes each request to the appropriate model source.
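The adapter pattern described here can be sketched as one interface with interchangeable backends. The class names are hypothetical and both backends are stubs that only echo the prompt; a production foundation-model backend would wrap the provider's SDK, which is deliberately left out of this sketch.

```python
from typing import Protocol


class ModelBackend(Protocol):
    """Common interface every model source must satisfy (assumed for this sketch)."""

    def generate(self, prompt: str) -> str: ...


class InternalModelBackend:
    """Stub for an internally hosted custom model."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def generate(self, prompt: str) -> str:
        return f"[internal:{self.model_name}] {prompt}"


class FoundationModelBackend:
    """Stub for a managed foundation model; a real one would call the provider SDK."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def generate(self, prompt: str) -> str:
        return f"[foundation:{self.model_name}] {prompt}"


class ApiAdapter:
    """Single entry point that routes a request to the registered model source."""

    def __init__(self) -> None:
        self._routes: dict[str, ModelBackend] = {}

    def register(self, model_id: str, backend: ModelBackend) -> None:
        self._routes[model_id] = backend

    def generate(self, model_id: str, prompt: str) -> str:
        backend = self._routes.get(model_id)
        if backend is None:
            raise LookupError(f"unknown model: {model_id}")
        return backend.generate(prompt)
```

Callers only ever see `ApiAdapter.generate`, so swapping a model from an internal host to a managed provider changes a registration line rather than client code.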

This architecture is designed to serve private models securely to diverse user groups and operational areas within the organization. The API layer secures interactions by providing dedicated endpoints for internally hosted models, which lets other authorized internal applications or service accounts consume these private models. The framework supports a range of internal stakeholders, including end users, data scientists, and AI governance officers, by providing strictly controlled access. This access is governed by robust authentication and authorization mechanisms, with Role-Based Access Control (RBAC) as a key component.
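A minimal sketch of an RBAC check for the stakeholder groups named above follows. The role names and permission strings are assumptions for illustration, and authentication (establishing who the caller is) is assumed to have happened upstream.

```python
# Hypothetical role-to-permission mapping for the stakeholder groups.
ROLE_PERMISSIONS = {
    "end_user": {"model:invoke"},
    "data_scientist": {"model:invoke", "card:read", "card:edit"},
    "governance_officer": {"card:read", "card:approve", "audit:read"},
}


def is_allowed(roles: list[str], permission: str) -> bool:
    """True if any of the caller's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def require(roles: list[str], permission: str) -> None:
    """Raise PermissionError unless the caller holds the permission."""
    if not is_allowed(roles, permission):
        raise PermissionError(f"permission denied: {permission}")
```

An endpoint handler could call `require(caller_roles, "model:invoke")` before forwarding a request, so unknown roles and missing permissions are denied by default.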

Enabling Innovation with Trust

By thoughtfully developing data and model products, wrapping them in robust governance informed by UDRA principles, and supporting them with a secure, scalable architecture like the one outlined, organizations can unlock significant benefits. These include enhanced user efficiency, a reduced burden on support teams, improved user satisfaction, increased accuracy and consistency of information, faster issue resolution, and better overall knowledge management.